In 2025, the AI chip landscape is witnessing transformative advancements that are supercharging edge computing and on-device intelligence. Leading this charge are Intel’s Panther Lake architecture and Apple’s M5 chip, two cutting-edge hardware solutions redefining how artificial intelligence workloads run closer to the user — faster, more efficiently, and with greater privacy.

This blog dives deep into the technical innovations, industry impact, and future-facing possibilities of these AI chips. It explains how neural accelerators, advanced process nodes (Intel 18A and 3nm), unified memory architecture, and next-gen GPU cores from Panther Lake and M5 empower real-time AI across devices ranging from laptops and tablets to smart glasses and industrial edge nodes. By the end, you’ll understand why 2025 marks a new era for AI hardware designed for edge intelligence.

The AI Chips Revolutionizing Edge Computing in 2025

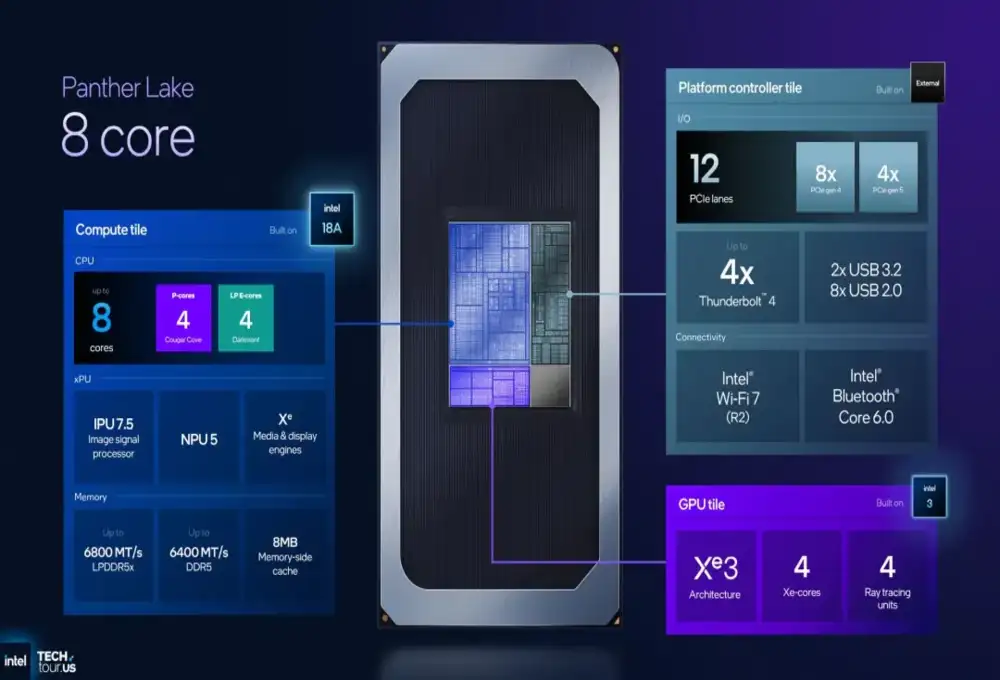

Intel Panther Lake: The First AI PC Platform Built on 18A Technology

Intel unveiled Panther Lake as a major step forward in AI computing hardware for personal computers. Built on Intel’s 18A process (named for 18 ångströms, i.e., 1.8 nm), Panther Lake integrates efficient neural accelerators alongside powerful CPU and GPU cores. This heterogeneous architecture is optimized for AI workloads such as machine learning model inference, neural network acceleration, and real-time data processing directly on the device.

Key features of Panther Lake include:

- Neural accelerators embedded in GPU cores: These accelerators handle AI inference tasks rapidly while offloading work from the general-purpose CPU.

- XPU architecture: Combines CPU, GPU, and AI accelerators in a single package, so AI and graphics workloads can each run on the engine best suited to them.

- Advanced 3D chiplet packaging: Stacks and interconnects separate dies (tiles) in one package, increasing performance density.

- High memory bandwidth shared by the CPU, GPU, and accelerators: Critical for AI models to stream data quickly and avoid bottlenecks.

- Enhanced ray tracing capabilities: Supports visually rich AI applications and simulations.

Because Panther Lake chips are tailored for PC platforms, they are expected to power not only general consumer laptops but also high-performance workstations used by developers, researchers, and creatives leveraging AI applications daily.

Apple M5 Chip: Over Four Times the Peak GPU Compute for AI and a Faster Neural Engine

Apple’s M5 chip builds on the company’s reputation for tight integration between hardware and software to deliver its most capable on-device AI yet. Fabricated on third-generation 3nm technology, the M5 combines a 10-core CPU, a 10-core GPU with a Neural Accelerator built into each GPU core, and a faster 16-core Neural Engine.

Standout features of Apple’s M5 include:

- 16-core Neural Engine and GPU Neural Accelerators: Apple rates M5 at over 4x the peak GPU compute for AI compared with M4, driven by the Neural Accelerators in each GPU core, while the faster Neural Engine supports applications such as real-time language translation and advanced image processing directly on-device.

- Unified memory architecture: The CPU, GPU, and Neural Engine share a single high-bandwidth memory pool, so data does not need to be copied between separate memories, which minimizes latency.

- Optimized for iPadOS, macOS, and visionOS: Ensures AI workloads run efficiently and seamlessly within Apple’s ecosystem.

- Support for complex models and frameworks: Enables developers to deploy sophisticated AI without cloud dependency.

- Energy-efficient design: Extends battery life in portable devices even under heavy AI workloads.

The M5 debuted in the 14-inch MacBook Pro, iPad Pro, and Apple Vision Pro, and is expected to power more Apple silicon devices, pushing the boundaries of mobile and desktop AI.

Why Edge Computing Matters for AI

Edge computing means performing data processing directly on devices or near the data source instead of sending everything to centralized cloud servers. This approach is critical for real-time AI applications that demand low latency, enhanced privacy, and offline capability. AI chips like Panther Lake and M5 are designed specifically to harness the benefits of edge intelligence.

Key Advantages

- Reduced latency: AI-powered decisions are made locally, without round-trip delays to cloud servers.

- Data privacy: Sensitive data can remain securely on-device rather than being transmitted over networks.

- Bandwidth savings: Less data transfer reduces network congestion and associated costs.

- Energy efficiency: Local AI processing can be optimized to conserve battery life and reduce energy consumption.

- Reliability: Edge AI systems remain operational even during network outages.
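To make the latency and bandwidth advantages concrete, here is a back-of-the-envelope Python sketch. Every number in it (a 60 ms network round trip, 5 ms of cloud compute versus 20 ms on-device, a 4 Mbit/s camera stream) is a hypothetical value chosen for illustration, not a measurement of Panther Lake, M5, or any real cloud service.

```python
from dataclasses import dataclass


@dataclass
class InferencePath:
    """One way of serving an AI request (all numbers below are hypothetical)."""
    name: str
    network_rtt_ms: float  # round trip to a server; 0 when running on-device
    compute_ms: float      # time to execute the model on that hardware

    def latency_ms(self) -> float:
        # Response time = network round trip + model execution time.
        return self.network_rtt_ms + self.compute_ms


def upload_mb_per_hour(bitrate_mbps: float) -> float:
    """Megabytes uploaded per hour when streaming at bitrate_mbps (megabits/s)."""
    return bitrate_mbps * 3600 / 8


# Illustrative values only, not measurements of Panther Lake, M5, or any cloud.
cloud = InferencePath("cloud", network_rtt_ms=60.0, compute_ms=5.0)
edge = InferencePath("on-device", network_rtt_ms=0.0, compute_ms=20.0)

for path in (cloud, edge):
    print(f"{path.name}: {path.latency_ms():.0f} ms")  # cloud: 65 ms, on-device: 20 ms

# Streaming a 4 Mbit/s camera feed to the cloud for a full hour:
print(f"raw upload: {upload_mb_per_hour(4.0):.0f} MB/hour")  # 1800 MB/hour
```

Even when a cloud GPU runs the model faster, the network round trip can dominate the total, and processing on-device means only results, not the raw stream, need to leave the device.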

With AI increasingly embedded in consumer electronics, industrial IoT, autonomous vehicles, and healthcare devices, high-performance AI chips at the edge enable smarter, faster, and more responsible artificial intelligence.

Innovations Driving AI Chip Performance

Neural Accelerators and AI GPU Cores

Both Intel and Apple integrate dedicated neural accelerators: specialized circuits optimized for the deep learning operations that dominate AI workloads, such as matrix multiplications and convolutions. This hardware offloads inference and training tasks from general-purpose CPUs, boosting speed and efficiency.

In Panther Lake, accelerators reside inside GPU cores, enabling synergy between AI and graphics processing that suits mixed workloads such as computer vision and augmented reality. Apple’s M5 Neural Engine dedicates 16 cores solely to AI, maximizing parallel processing and throughput for machine learning tasks on mobile devices.
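Why matrix multiplications and convolutions dominate becomes clear when you count the multiply-accumulate (MAC) operations in common layers. The Python sketch below is a simplified model that assumes a batch of one image for convolutions, no grouped convolutions, and output sizes given directly; the helper names are hypothetical and do not come from any framework.

```python
def dense_macs(batch: int, in_features: int, out_features: int) -> int:
    """MACs for a fully connected layer.

    y = x @ W with x: (batch, in_features) and W: (in_features, out_features)
    is a plain matrix multiplication; each output element needs in_features MACs.
    """
    return batch * in_features * out_features


def conv2d_macs(h_out: int, w_out: int, c_in: int, c_out: int, k: int) -> int:
    """MACs for a 2D convolution with a k x k kernel (one image, no groups).

    Every output pixel in every output channel sums over a k*k*c_in window.
    """
    return h_out * w_out * c_out * (k * k * c_in)


def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Naive matrix multiply: the core operation neural accelerators parallelize."""
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions must match")
    return [[sum(a[i][p] * b[p][j] for p in range(inner)) for j in range(cols)]
            for i in range(rows)]


print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
print(dense_macs(1, 4096, 4096))                   # 16777216
print(conv2d_macs(224, 224, 3, 64, 3))             # 86704128
```

A single early convolution layer on a 224 x 224 image already needs tens of millions of MACs (roughly twice as many floating-point operations), which is why hardware that performs many MACs in parallel pays off.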

Advanced Process Technology: 3nm and 18A

The leading-edge processes behind these chips, Apple’s third-generation 3nm process for M5 and Intel’s 18A process for Panther Lake, pack transistors at unprecedented density. This shrink translates into:

- Higher performance in smaller chips

- Reduced power consumption

- Improved thermal characteristics allowing sustained high-frequency operation

These improvements allow AI hardware to deliver intensive computations without excessive heat or battery drain.
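One textbook way to see why smaller, lower-voltage transistors reduce power is the first-order CMOS switching-power model, P = αCV²f (activity factor α, switched capacitance C, supply voltage V, clock frequency f). The Python sketch below plugs in made-up values purely to show the scaling; it ignores leakage power and does not describe either chip.

```python
def dynamic_power_w(activity: float, capacitance_f: float,
                    voltage_v: float, freq_hz: float) -> float:
    """First-order CMOS switching power: P = alpha * C * V^2 * f (leakage ignored)."""
    return activity * capacitance_f * voltage_v ** 2 * freq_hz


# Hypothetical values chosen only to illustrate scaling, not real chip data.
old = dynamic_power_w(0.1, 1.0e-9, 1.0, 3e9)
new = dynamic_power_w(0.1, 0.8e-9, 0.9, 3e9)  # 20% less capacitance, 10% lower voltage, same clock

print(f"relative power: {new / old:.3f}")  # relative power: 0.648
```

Because voltage enters squared, a 10% voltage reduction alone cuts switching power by about 19%, and lower power also means less heat to remove, which helps sustain high clock speeds.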

Unified Memory Architecture

AI workloads benefit greatly from a unified memory architecture, in which multiple processors (CPU, GPU, AI cores) access a shared pool of data instead of copying it between separate memories. Both Panther Lake and M5 feature high-bandwidth memory interfaces that minimize data-movement delays, which suits real-time AI models processing multiple data streams simultaneously.
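A rough Python sketch shows why avoiding copies and raising bandwidth both matter. It assumes a hypothetical 8-billion-parameter model quantized to 4 bits, a 32 GB/s link for copying between separate memory pools, and 120 GB/s of memory bandwidth; none of these figures are specifications of Panther Lake or M5. The decode estimate relies on the common rule of thumb that generating each token reads roughly all model weights once.

```python
def copy_time_ms(num_bytes: float, link_gb_per_s: float) -> float:
    """Time to copy a buffer across a link of the given bandwidth (1 GB = 1e9 bytes)."""
    return num_bytes / (link_gb_per_s * 1e9) * 1e3


def decode_tokens_per_s_upper_bound(weight_bytes: float, mem_gb_per_s: float) -> float:
    """Rough ceiling for memory-bound token generation.

    Assumes each generated token streams all weights from memory once, so
    tokens/s cannot exceed bandwidth / model size. Ignores caches, KV-cache
    traffic, and batching.
    """
    return mem_gb_per_s * 1e9 / weight_bytes


# Hypothetical model: 8e9 parameters at 4 bits (0.5 byte) each = 4 GB of weights.
weights = 8e9 * 0.5

print(f"copy between pools: {copy_time_ms(weights, 32):.0f} ms")                 # 125 ms
print(f"decode ceiling: {decode_tokens_per_s_upper_bound(weights, 120):.0f} tok/s")  # 30 tok/s
```

With a shared memory pool the copy step disappears, and for bandwidth-bound work such as token generation, more memory bandwidth raises the ceiling directly.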

Chiplet Architecture and Cooling

Intel’s 3D chiplet packaging technology stacks multiple processor dies vertically, increasing performance density while enhancing power efficiency. Advanced cooling solutions are critical to maintaining performance under AI workload demands without thermal throttling.

Real-World Applications Empowered by Next-Gen AI Chips

Consumer Devices

- Tablets and laptops with Apple M5, such as iPad Pro and MacBook Pro, deliver faster photo editing using neural filters, smarter voice assistants, and enhanced augmented reality.

- Laptops with Intel Panther Lake can run local AI-driven development tools, creative suites, and gaming experiences with superior graphics and inferencing capabilities.

Industrial and IoT

- Real-time AI analytics on factory floors accelerate process optimizations.

- Autonomous robots and smart cameras gain improved perception and decision-making powered at the edge.

Healthcare

- AI-enabled wearable devices provide continuous monitoring and instant health insights without cloud dependency.

Automotive

- Next-gen driver assistance systems perform sensor fusion and make on-the-fly decisions on edge AI hardware.

The Competitive Landscape and Market Trends

Intel and Apple represent two distinct but converging approaches: Intel’s heterogeneous XPU strategy targets versatile AI-enabled PCs, while Apple tightly integrates hardware-software stacks for optimized on-device AI in mobile-first ecosystems.

Demand for AI chips is growing rapidly, and the edge AI chip market is projected to reach a multi-billion-dollar valuation by 2026 as adoption spreads across sectors. Competition from chipmakers such as Nvidia, Qualcomm, and AMD continues to accelerate the pace of innovation.


Challenges and Future Outlook

Despite breakthroughs, challenges remain:

- Balancing power efficiency with AI performance is a constant design tradeoff.

- AI software frameworks need to evolve to fully harness heterogeneous architectures.

- Supply chain constraints for advanced fabrication nodes impact availability.
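What "harnessing heterogeneous architectures" asks of software can be framed as a placement problem: each operation should run on the engine best suited to it. The Python example below is a hypothetical greedy scheduler with invented per-operation costs, where "NPU" stands in for a dedicated neural accelerator. Real frameworks rely on measured profiles and also weigh the cost of moving data between engines, which this sketch ignores.

```python
from enum import Enum


class Engine(Enum):
    CPU = "cpu"
    GPU = "gpu"
    NPU = "npu"  # stand-in for a dedicated neural accelerator


# Hypothetical per-operation cost estimates in microseconds. A real framework
# would measure or model these for the specific chip and model.
COST_US: dict[str, dict[Engine, int]] = {
    "matmul":   {Engine.CPU: 900, Engine.GPU: 120, Engine.NPU: 80},
    "softmax":  {Engine.CPU: 40,  Engine.GPU: 25,  Engine.NPU: 60},
    "tokenize": {Engine.CPU: 30},  # assumed to be supported only on the CPU
}


def assign(ops: list[str]) -> list[tuple[str, Engine]]:
    """Greedily place each op on the cheapest engine that supports it."""
    plan = []
    for op in ops:
        options = COST_US[op]
        engine = min(options, key=options.get)  # engine with the lowest cost
        plan.append((op, engine))
    return plan


for op, engine in assign(["tokenize", "matmul", "softmax"]):
    print(op, "->", engine.value)  # tokenize -> cpu, matmul -> npu, softmax -> gpu
```

Because this greedy plan bounces data across three engines, a framework that also counted transfer costs might keep the softmax next to the matmul instead; making those trade-offs automatically is part of what frameworks still need to improve.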

Looking ahead, we expect:

- Wider adoption of chiplet-based scalable AI architectures.

- Emergence of specialized AI cores tailored for domains like natural language or vision.

- Expanded integration of AI capabilities in wearables, AR/VR, and robotics.

Conclusion

Intel’s Panther Lake and Apple’s M5 chips are powerful catalysts propelling edge computing into a new era of on-device intelligence. Through innovations like neural accelerators, advanced 3nm and 18A process nodes, and unified memory, these AI chips enable smarter, faster, and more energy-efficient AI applications on everything from laptops to mobile devices.

As organizations and consumers prioritize real-time AI, privacy, and seamless experiences, the synergy of state-of-the-art hardware and edge computing will shape the future of artificial intelligence for years to come.