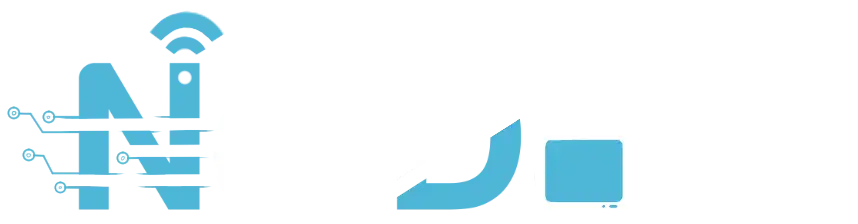

Software performance optimization has long been one of the most time-consuming tasks in development — requiring countless hours of profiling, refactoring, and regression testing. Now, AI code optimization is transforming that process. Powered by Large Language Models (LLMs) and advanced performance analysis tools, AI-driven optimizers can automatically detect inefficiencies, rewrite functions, validate correctness, and even open pull requests in your CI/CD pipeline.

In this guide, we’ll explore how automated code optimization works, how to implement it safely, and how it’s reshaping software engineering workflows. Drawing from “Automating Code Performance Optimization with AI,” we’ll uncover real-world examples, ethical considerations, and actionable steps to get started.

What Is AI Code Optimization?

AI code optimization is the process of using machine learning or language models to automatically analyze, refactor, and improve source code for better speed, efficiency, or cost without changing its functionality.

Traditionally, optimization was a manual task handled by senior engineers using profilers, benchmarking frameworks, and intuition. Now, AI agents can assist or automate these tasks by:

- Detecting performance bottlenecks from telemetry and profiling data

- Suggesting more efficient algorithms or libraries

- Generating optimized variants of functions or loops

- Validating the functional equivalence of new code

- Submitting pull requests (PRs) automatically after verification

This shift is not just about speed — it’s about scalability and safety, ensuring every optimization is both validated and traceable.

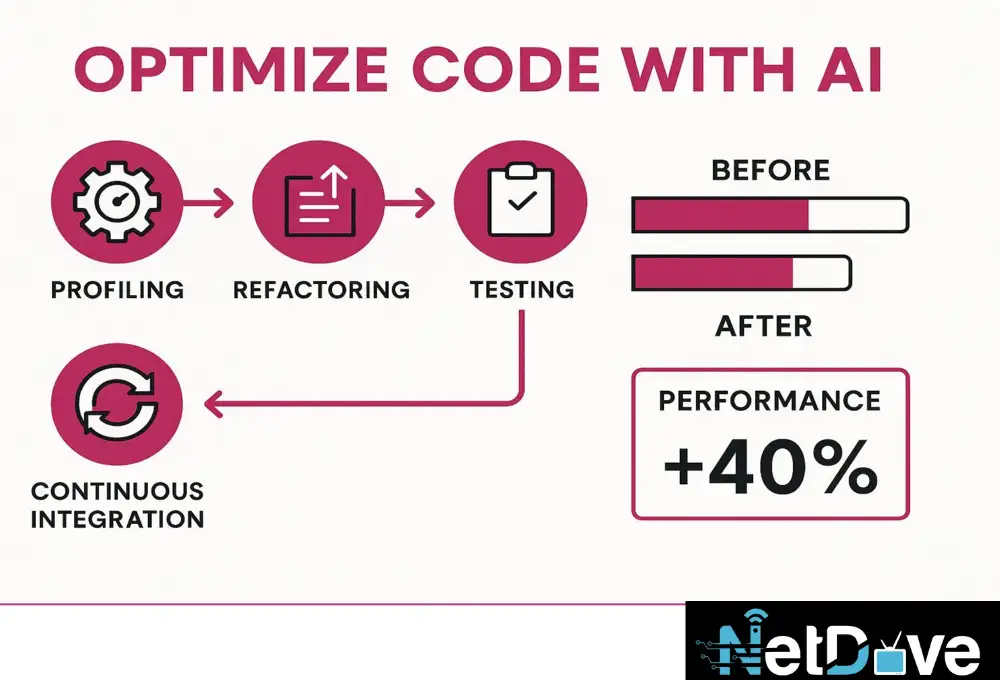

The Core Process of AI-Driven Code Optimization

Profiling and Bottleneck Detection

The first step is profiling, where tools like cProfile, perf, or flamegraph identify functions or code paths consuming excessive CPU, memory, or I/O.

```python
import cProfile
import pstats
from io import StringIO

def slow_function():
    total = 0
    for i in range(10_000_000):
        total += i
    return total

pr = cProfile.Profile()
pr.enable()
slow_function()
pr.disable()

# Collect the report in memory, sorted by cumulative time per function.
s = StringIO()
ps = pstats.Stats(pr, stream=s).sort_stats("cumulative")
ps.print_stats()
print(s.getvalue())
```

Profiling data becomes input to the AI model — providing context on which segments need optimization.
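One way to turn profiler output into model input is to reduce it to a short, structured list of hotspots. The sketch below is an assumption about how that hand-off might look, not a description of any particular tool: `top_hotspots` is a hypothetical helper, and it relies on `pstats.Stats.get_stats_profile()`, which requires Python 3.9 or later.

```python
import cProfile
import pstats


def slow_function(n=200_000):
    """Same loop as above, with a smaller default so the example runs quickly."""
    total = 0
    for i in range(n):
        total += i
    return total


def top_hotspots(profiler, limit=5):
    """Return up to `limit` profiled functions, highest cumulative time first."""
    profile = pstats.Stats(profiler).get_stats_profile()
    rows = [
        {
            "function": name,
            "file": fp.file_name,
            "line": fp.line_number,
            "calls": fp.ncalls,
            "cumtime_s": fp.cumtime,
        }
        for name, fp in profile.func_profiles.items()
    ]
    rows.sort(key=lambda row: row["cumtime_s"], reverse=True)
    return rows[:limit]


profiler = cProfile.Profile()
profiler.runcall(slow_function)
hotspots = top_hotspots(profiler)
for row in hotspots:
    print(row)
```

A compact summary like this keeps the prompt small and points the model at the functions that dominate runtime.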

AI Model Suggests Optimized Variants

Using that context, an LLM-based optimizer (e.g., an OpenAI code model or Google Gemini) generates candidate rewrites. Prompts state the performance objective along with constraints such as safety, readability, or dependency limits.

Example system prompt:

“Rewrite the function to reduce time complexity without altering output. Prioritize vectorized operations and standard library functions.”
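A prompt like this is usually assembled from several pieces rather than written by hand each time. The following is a minimal sketch under stated assumptions: `build_optimization_prompt` is a hypothetical helper, the hotspot dictionary layout is invented for illustration, and the timing figure is a placeholder rather than a measurement. No model API is called; the function only builds the text.

```python
def build_optimization_prompt(source, hotspot, constraints):
    """Assemble a plain-text prompt from source code, profiler data, and constraints."""
    constraint_lines = "\n".join(f"- {c}" for c in constraints)
    return (
        "Rewrite the function to reduce time complexity without altering output. "
        "Prioritize vectorized operations and standard library functions.\n\n"
        f"Profiler summary: {hotspot['function']} took {hotspot['cumtime_s']:.3f}s "
        f"cumulative over {hotspot['calls']} call(s).\n\n"
        f"Constraints:\n{constraint_lines}\n\n"
        f"Source:\n{source}"
    )


source = '''def slow_function():
    total = 0
    for i in range(10_000_000):
        total += i
    return total
'''
# Placeholder profiler numbers, for illustration only.
hotspot = {"function": "slow_function", "cumtime_s": 0.412, "calls": "1"}
prompt = build_optimization_prompt(
    source,
    hotspot,
    ["No new third-party dependencies", "Keep the public signature unchanged"],
)
print(prompt)
```

Keeping constraints as an explicit list makes them easy to log and review alongside the generated code.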

Example transformation:

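```python
import numpy as np

def fast_function():
    # int64 avoids overflow on platforms where NumPy's default integer is 32-bit.
    return np.sum(np.arange(10_000_000, dtype=np.int64))
```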

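The optimized version returns the same result but moves the loop out of the Python interpreter and into NumPy's compiled C code, which is typically much faster at this size. Note that it is still linear in the number of elements; the gain comes from a smaller constant factor, not lower time complexity.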

Automated Correctness Verification

Optimization is meaningless if the output changes. That’s where test generation, fuzzing, and concolic testing (symbolic + concrete execution) come in.

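```python
import numpy as np
from hypothesis import given, strategies as st

# Parameterized variants of the earlier functions, so the input size can vary.
def slow_function(n):
    return sum(range(n))

def fast_function(n):
    return int(np.arange(n, dtype=np.int64).sum())

@given(st.integers(min_value=0, max_value=10_000))
def test_equivalence(n):
    assert slow_function(n) == fast_function(n)
```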

AI-powered tools can generate these tests automatically, checking functional equivalence before deployment. Passing tests are strong evidence rather than proof, so each change should still ship with results a reviewer can inspect.
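Where Hypothesis is not available, the same differential idea can be sketched with the standard library alone: feed identical random inputs to the reference and the candidate, and report the first disagreement. The names `reference_sum`, `candidate_sum`, and `find_counterexample` are hypothetical, and the closed-form rewrite is just one example of a change an optimizer might propose.

```python
import random


def reference_sum(n):
    """Straightforward loop: the behavior to preserve."""
    total = 0
    for i in range(n):
        total += i
    return total


def candidate_sum(n):
    """Closed-form rewrite of the same sum: n * (n - 1) / 2."""
    return n * (n - 1) // 2


def find_counterexample(reference, candidate, make_input, trials=500, seed=0):
    """Return the first input where the two functions disagree, or None."""
    rng = random.Random(seed)  # fixed seed keeps failures reproducible
    for _ in range(trials):
        value = make_input(rng)
        if reference(value) != candidate(value):
            return value
    return None


mismatch = find_counterexample(
    reference_sum, candidate_sum, lambda rng: rng.randint(0, 2_000)
)
print("counterexample:", mismatch)
```

A result of `None` means no disagreement was found in the sampled inputs, which is weaker than a proof of equivalence.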

Benchmarking and Regression Detection

AI optimizers benchmark both the original and optimized code using tools like pytest-benchmark or Google’s benchmark library.

```bash
pytest --benchmark-only
```

Benchmarks must be stable and reproducible, accounting for CPU noise and environment variability. In “Automating Code Performance Optimization with AI,” the authors note that “denoising benchmark data” and repeating runs until variance < 5% is a best practice.
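The "repeat until variance is under 5%" rule can be sketched with `timeit` and `statistics`. This is an illustration under assumptions, not the authors' procedure: it interprets "variance" as the coefficient of variation (standard deviation divided by mean) of one batch of timings, and `relative_spread` and `stable_benchmark` are hypothetical helpers.

```python
import statistics
import timeit


def relative_spread(samples):
    """Coefficient of variation: sample standard deviation divided by the mean."""
    mean = statistics.mean(samples)
    if mean == 0:
        return 0.0
    return statistics.stdev(samples) / mean


def stable_benchmark(fn, threshold=0.05, repeat=5, number=100, max_attempts=10):
    """Re-run timing batches until one is within `threshold`, or give up."""
    for attempt in range(1, max_attempts + 1):
        samples = timeit.repeat(fn, repeat=repeat, number=number)
        spread = relative_spread(samples)
        if spread < threshold:
            break
    return {
        "stable": spread < threshold,
        "attempts": attempt,
        "best_s": min(samples) / number,  # per-call time from the fastest run
        "spread": spread,
    }


result = stable_benchmark(lambda: sum(range(1_000)))
print(result)
```

Capping the number of attempts matters: on a noisy machine the loop would otherwise never finish, and an unstable result is better reported than hidden.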

Continuous monitoring tools in production (Datadog, Prometheus, or OpenTelemetry) can further detect regressions after deployment.

CI/CD Integration and Auto-PR Workflows

Modern development pipelines integrate AI optimization directly into CI/CD. A GitHub Action or Jenkins job can trigger an optimizer agent to:

- Identify performance regressions

- Generate optimized code

- Run correctness tests

- Submit an automated pull request

- Tag reviewers for validation

This human-in-the-loop model ensures balance between automation and accountability.
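The decision at the end of such a pipeline can be written down as a small gate function. The sketch below is hypothetical: the `CandidateReport` fields, the 10% minimum speedup, and the outcome names are assumptions chosen for illustration, not part of any CI product.

```python
from dataclasses import dataclass


@dataclass
class CandidateReport:
    tests_passed: bool
    benchmark_stable: bool
    baseline_s: float
    candidate_s: float
    touches_sensitive_code: bool


def decide(report, min_speedup=1.10):
    """Return 'reject', 'open-pr', or 'open-pr-owner-review' for one candidate."""
    if not (report.tests_passed and report.benchmark_stable):
        return "reject"
    if report.candidate_s <= 0:
        return "reject"  # a non-positive timing means the measurement is broken
    if report.baseline_s / report.candidate_s < min_speedup:
        return "reject"  # not enough gain to justify a change
    if report.touches_sensitive_code:
        return "open-pr-owner-review"
    return "open-pr"


report = CandidateReport(
    True, True, baseline_s=0.80, candidate_s=0.50, touches_sensitive_code=False
)
print(decide(report))
```

Both non-reject outcomes still end in a pull request that a person reviews; the gate only decides whether a candidate is worth a reviewer's time and who should look at it.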

Tip: Use feature flags or canary deployments for gradual rollout of AI-optimized code.
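A percentage-based rollout can be sketched in a few lines. This is an illustrative assumption rather than a recommendation of a specific flagging system: `in_rollout` and `pick` are hypothetical helpers, and the request key is a placeholder.

```python
import hashlib


def in_rollout(key, percent):
    """Hash `key` into a bucket from 0 to 99 and admit it if bucket < percent."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < percent


def pick(key, percent, original, optimized):
    """Choose which implementation serves the request identified by `key`."""
    return optimized if in_rollout(key, percent) else original


def total_original(values):
    total = 0
    for v in values:
        total += v
    return total


def total_optimized(values):
    return sum(values)


impl = pick("request-1234", percent=10, original=total_original, optimized=total_optimized)
print(impl.__name__, impl([1, 2, 3]))
```

Hashing the key, instead of drawing a random number per call, keeps each caller on the same implementation across requests, which makes regressions easier to attribute.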

Use Cases and Real-World Examples

Python & Data Science Optimization

Data teams use AI to convert Python loops into NumPy vectorized code, drastically reducing execution time:

```python
# Original
def normalize(arr):
    return [(x - min(arr)) / (max(arr) - min(arr)) for x in arr]

# Optimized
import numpy as np

def normalize_np(arr):
    arr = np.array(arr)
    return (arr - arr.min()) / (arr.max() - arr.min())
```

Result: a speedup on the order of 50x for large datasets; the exact factor depends on array size and hardware.
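Part of the cost in the original list comprehension is that `min(arr)` and `max(arr)` are recomputed for every element. The sketch below, with the hypothetical name `normalize_hoisted`, computes them once in plain Python and checks that the results match exactly. It also shows two edge cases that an automated rewrite has to preserve.

```python
def normalize(arr):
    """Original: recomputes min(arr) and max(arr) for every element."""
    return [(x - min(arr)) / (max(arr) - min(arr)) for x in arr]


def normalize_hoisted(arr):
    """Same arithmetic, but min and max are computed once."""
    if not arr:
        return []  # the original returns [] here; min([]) would raise ValueError
    lo, hi = min(arr), max(arr)
    return [(x - lo) / (hi - lo) for x in arr]


data = [3.0, 1.0, 4.0, 1.5, 9.0, 2.5]
assert normalize(data) == normalize_hoisted(data)
assert normalize([]) == normalize_hoisted([]) == []

# Edge case: a constant input makes max - min zero.
for fn in (normalize, normalize_hoisted):
    try:
        fn([2.0, 2.0])
    except ZeroDivisionError:
        print(fn.__name__, "raises ZeroDivisionError on constant input")
```

On constant input, the NumPy version behaves differently from both of these: with float arrays it returns `nan` values and emits a `RuntimeWarning` instead of raising. An equivalence check should cover such inputs before the rewrite is accepted.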

Web Services & Backend Systems

LLMs can identify inefficient database queries or unnecessary JSON serialization loops. Replacing Python’s json with orjson or batching queries can reduce latency by up to 40%.
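Query batching can be illustrated with the standard library's `sqlite3` module. This is a minimal sketch with a made-up `users` table and hypothetical function names; it shows the one-query-per-id pattern next to a single parameterized `IN` query and checks that both return the same rows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany(
    "INSERT INTO users (id, name) VALUES (?, ?)",
    [(i, f"user{i}") for i in range(1, 6)],
)


def names_one_by_one(conn, ids):
    """One query per id (the N+1 pattern)."""
    names = {}
    for user_id in ids:
        row = conn.execute(
            "SELECT name FROM users WHERE id = ?", (user_id,)
        ).fetchone()
        if row is not None:
            names[user_id] = row[0]
    return names


def names_batched(conn, ids):
    """One query for all ids, using a parameterized IN clause."""
    if not ids:
        return {}
    placeholders = ", ".join("?" for _ in ids)
    rows = conn.execute(
        f"SELECT id, name FROM users WHERE id IN ({placeholders})", list(ids)
    )
    return {user_id: name for user_id, name in rows}


wanted = [1, 3, 5, 42]
assert names_one_by_one(conn, wanted) == names_batched(conn, wanted)
print(names_batched(conn, wanted))
```

Only the placeholders are interpolated into the SQL string; the values are still bound as parameters. Very large id lists may need to be split into chunks, because databases cap the number of bound parameters per statement.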

Embedded & Edge Computing

AI optimizers trained on hardware-specific profiles can minimize memory usage and improve energy efficiency — key in IoT or robotics applications.

Game Development

AI models can optimize physics calculations or procedural generation algorithms without affecting gameplay fidelity.

The Advantages of AI Code Optimization

| Benefit | Description |
| --- | --- |
| Speed | Automates tedious profiling and refactoring cycles |
| Scalability | Can run across thousands of functions or modules |
| Safety | Uses automated testing & equivalence checking |
| Cost Efficiency | Reduces compute time and cloud costs |
| Continuous Improvement | Learns from prior optimizations for future suggestions |

Best Practices for Implementing AI Code Optimization

Always Benchmark Before and After

Without baselines, performance gains are meaningless. Establish reproducible tests and store results in version control.
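A baseline can be as simple as a JSON file committed next to the code. The sketch below is one possible layout, not a standard: `save_baseline` and `compare_to_baseline` are hypothetical helpers, the 5% tolerance is an assumption, and the timings are illustrative numbers rather than measurements.

```python
import json
import tempfile
from pathlib import Path


def save_baseline(path, results):
    """Write benchmark results as sorted, indented JSON so diffs stay readable."""
    path.write_text(json.dumps(results, indent=2, sort_keys=True) + "\n")


def compare_to_baseline(path, current, tolerance=0.05):
    """Return names whose time exceeds the baseline by more than `tolerance`."""
    baseline = json.loads(path.read_text())
    return sorted(
        name
        for name, seconds in current.items()
        if name in baseline and seconds > baseline[name] * (1 + tolerance)
    )


with tempfile.TemporaryDirectory() as tmp:
    baseline_file = Path(tmp) / "benchmarks.json"
    # Illustrative timings in seconds.
    save_baseline(baseline_file, {"normalize": 0.120, "load_rows": 0.300})
    regressions = compare_to_baseline(
        baseline_file, {"normalize": 0.050, "load_rows": 0.360}
    )

print(regressions)
```

Sorting keys before writing keeps the file stable between runs, so version-control diffs show only the numbers that actually changed.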

Integrate in CI/CD, Not Production

Treat optimization like any other code change — validate through CI before deployment.

Require Human Review for High-Risk Areas

Sensitive code (auth, encryption, finance) should always be reviewed manually.

Maintain Governance & Provenance

Log every optimization’s:

- Prompt and model version

- Benchmark data

- Test suite used

- Approval signatures

This supports compliance audits and keeps every change transparent and traceable.
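The items in that list map naturally onto one record per optimization. The following is a sketch under assumptions: the `OptimizationRecord` fields, the model name, the test path, and the reviewer address are all placeholders invented for illustration.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class OptimizationRecord:
    prompt: str
    prompt_sha256: str
    model_version: str
    benchmark: dict
    test_suite: str
    approved_by: list
    recorded_at: str


def make_record(prompt, model_version, benchmark, test_suite, approved_by):
    """Bundle the evidence for one optimization into a serializable record."""
    return OptimizationRecord(
        prompt=prompt,
        prompt_sha256=hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        model_version=model_version,
        benchmark=dict(benchmark),
        test_suite=test_suite,
        approved_by=list(approved_by),
        recorded_at=datetime.now(timezone.utc).isoformat(),
    )


record = make_record(
    prompt="Rewrite the function to reduce time complexity without altering output.",
    model_version="example-model-v1",  # placeholder, not a real model name
    benchmark={"baseline_s": 0.80, "candidate_s": 0.50},  # illustrative numbers
    test_suite="tests/test_equivalence.py",  # hypothetical path
    approved_by=["reviewer@example.com"],
)
log_line = json.dumps(asdict(record), sort_keys=True)
print(log_line)
```

Writing one JSON object per line makes the log easy to append to and to search later; the prompt hash lets auditors confirm that a stored prompt was not edited after the fact.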

Educate Developers

AI code optimization should augment, not replace, human developers. Teach your team to interpret profiler results and guide AI suggestions.

Risks and Limitations

Even the best AI optimizers aren’t perfect. Common pitfalls include:

- Incorrect optimizations that alter logic subtly

- Benchmark noise leading to false performance gains

- Overfitting to test data (i.e., optimizing for benchmarks, not reality)

- Security vulnerabilities from unverified code generation

- License contamination from training data sources

Mitigate these with sandboxed execution, test isolation, and code provenance checks before merging.
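As a first layer of isolation, generated code can be run in a separate interpreter process with a timeout. The sketch below uses only the standard library, and `run_isolated` is a hypothetical helper. It is process isolation, not a security sandbox: untrusted code still needs a container, virtual machine, or similar boundary.

```python
import subprocess
import sys


def run_isolated(code, timeout_s=5.0):
    """Run `code` in a separate Python process and capture its output."""
    try:
        completed = subprocess.run(
            [sys.executable, "-I", "-c", code],  # -I: isolated mode, ignores env vars and user site
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return {"ok": False, "stdout": "", "stderr": "timed out"}
    return {
        "ok": completed.returncode == 0,
        "stdout": completed.stdout,
        "stderr": completed.stderr,
    }


good = run_isolated("print(sum(range(10)))")
stuck = run_isolated("while True: pass", timeout_s=0.5)
print(good)
print(stuck)
```

The timeout catches a common failure of generated code, a loop that never terminates, before it can stall the pipeline.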

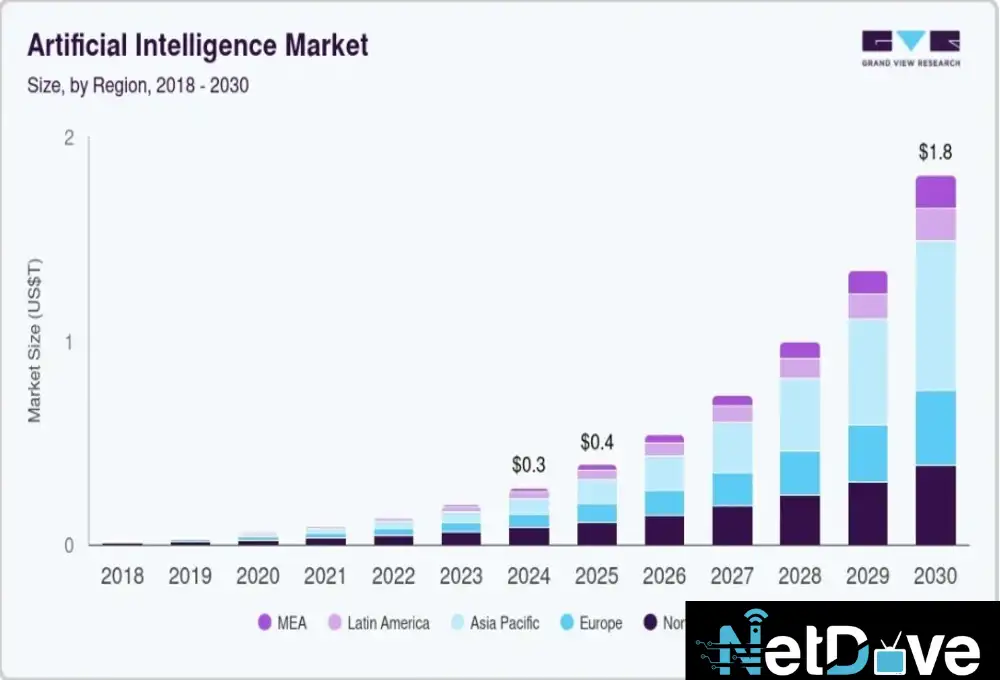

The Future: Self-Optimizing Systems

The next step in this evolution is autonomous optimization agents: AI systems that monitor performance continuously and adjust algorithms in real time.

Imagine:

- A web server that rewrites its caching logic mid-flight

- A data pipeline that reorders transformations for efficiency

- A compiler that learns optimal heuristics from user behavior

These self-healing, self-optimizing systems will define the next decade of software engineering, blending reinforcement learning, observability, and continuous improvement loops.

Tools and Frameworks for AI Code Optimization

| Tool | Description |
| --- | --- |
| cProfile / py-spy / perf | Runtime profiling |
| pytest-benchmark | Stable benchmarking |
| Hypothesis / AFL / fuzzing tools | Correctness testing |
| LLM APIs (OpenAI, Anthropic, Google) | Optimization generation |
| GitHub Actions / Jenkins / GitLab CI | CI/CD automation |
| SonarQube / Bandit | Security validation |
| Datadog / Prometheus / Grafana | Runtime performance observability |

Each tool plays a role in maintaining an automated yet trustworthy optimization cycle.

Case Study: AI Optimizing Data Processing Pipelines

In “Automating Code Performance Optimization with AI,” researchers applied an LLM-driven optimizer to a Python ETL pipeline.

The model suggested 38 code refactors — from function inlining to vectorized I/O — achieving:

- 52% reduction in runtime latency

- 32% less memory usage

- 0 failed equivalence tests after validation

The team concluded that AI-assisted refactoring reduced technical debt and freed engineers to focus on higher-level architectural work.