In the fast-paced world of software delivery, the DevOps revolution has already redefined how organizations build, test, and release code. But with the increasing complexity of microservices, multi-cloud architectures, and continuous delivery pipelines, traditional testing methods are struggling to keep pace.

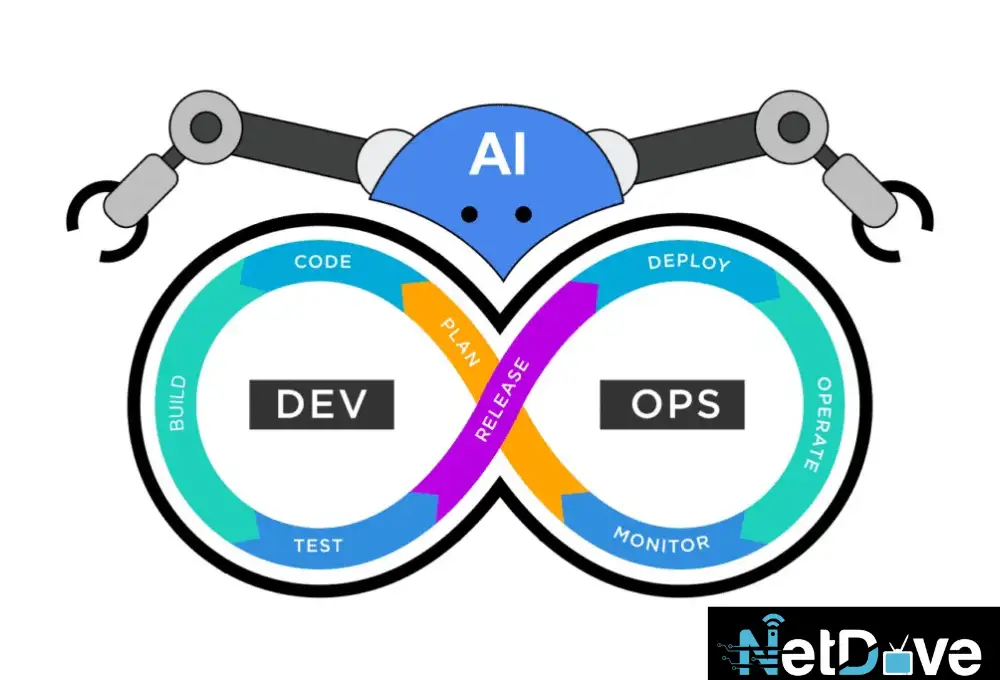

Enter AI-driven DevOps: an approach that integrates machine learning and automation into each stage of the DevOps lifecycle. It aims to detect defects sooner, prioritize work intelligently, and keep quality checks running continuously, while reducing manual effort and cost.

This is not just automation — it’s autonomous quality engineering, where AI systems predict, adapt, and optimize in real time.

What Is AI-Driven Testing in DevOps?

AI-driven testing is the application of artificial intelligence and machine learning algorithms to automate and optimize the testing lifecycle — from test creation and execution to defect analysis and reporting.

Unlike conventional test automation tools, AI-driven systems learn from historical test data, production logs, and code repositories, making them capable of:

- Predicting high-risk modules before release.

- Automatically generating relevant test cases.

- Detecting flaky or redundant tests.

- Enhancing coverage with minimal human input.
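
To make one of these capabilities concrete, here is a minimal sketch of flaky-test detection. It uses a simple rule rather than a trained model: a test that both passed and failed on the same commit is treated as flaky. The tuple layout and the sample history are assumptions made for illustration.

```python
from collections import defaultdict


def find_flaky_tests(runs):
    """Return test names that both passed and failed on the same commit.

    `runs` is an iterable of (test_name, commit_sha, passed) tuples.
    """
    outcomes = defaultdict(set)
    for test_name, commit_sha, passed in runs:
        outcomes[(test_name, commit_sha)].add(passed)
    # Two distinct outcomes for one (test, commit) pair means the code did not
    # change between runs, so the test itself is the likely source of noise.
    flaky = {name for (name, _sha), seen in outcomes.items() if len(seen) == 2}
    return sorted(flaky)


# Hypothetical CI history.
history = [
    ("test_login", "a1b2c3", True),
    ("test_login", "a1b2c3", False),    # same commit, different outcome
    ("test_checkout", "a1b2c3", False),
    ("test_checkout", "d4e5f6", True),  # outcome changed with the code
    ("test_search", "a1b2c3", True),
]
print(find_flaky_tests(history))  # ['test_login']
```

A learned model could replace the rule, for example by weighting retries or timing variance, but the input data would look much the same.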

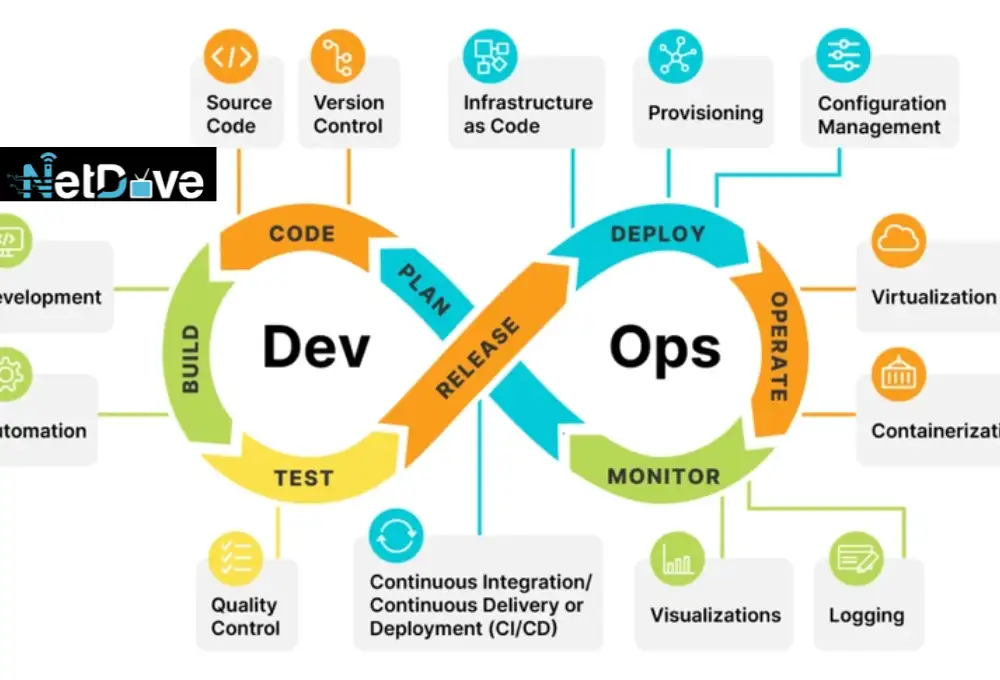

AI’s Role Across the DevOps Testing Cycle

| DevOps Phase | AI Application | Example Tools |
| --- | --- | --- |
| Plan | Risk prediction based on commit history | GitHub Copilot, CodeGuru |
| Code | Static code analysis, defect prediction | DeepCode, SonarQube AI |
| Test | Test generation, prioritization, self-healing | Testim, Mabl, Functionize |
| Release | Predictive deployment validation | Launchable, Harness.io |
| Monitor | AI observability and anomaly detection | Dynatrace, Datadog AI |

Why DevOps Needs AI-Driven Testing

Some DevOps teams deploy code hundreds or even thousands of times a day, yet manual test cases and static automation scripts rarely scale at the same rate.

Key Pain Points:

- Flaky tests slow down CI/CD pipelines.

- Long feedback loops delay releases.

- Limited coverage in dynamic environments.

- Reactive testing instead of predictive QA.

AI’s Solution:

AI brings predictive intelligence to testing — it doesn’t just automate, it anticipates.

Machine learning models trained on code commits, bug reports, and runtime data can predict failure probability before tests even run.

This paradigm shift allows teams to move from “shift-left” testing to “shift-everywhere,” where quality is infused continuously across the pipeline.

The Core Components of AI-Driven Testing Pipelines

Intelligent Test Generation

AI models analyze existing code bases and user behavior to automatically generate test cases.

For instance:

    # Example: AI-generated unit test suggestion
    # (payment_api is the application's payment client, assumed to be
    # imported by the test module)
    def test_payment_api_handles_invalid_card():
        response = payment_api.process(card_number="0000-0000-0000-0000")
        assert response.status_code == 400

By understanding patterns in historical data, these systems can identify missing edge cases human testers might overlook.
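
One way to picture the edge-case idea is to compare the kinds of input seen in production with the kinds of input the existing tests exercise. The sketch below uses hand-written categories instead of a learned model, and the category names, the 16-digit rule, and the sample inputs are all assumptions chosen for illustration.

```python
import re


def categorize_card_input(value):
    """Map a raw card-number string to a coarse input category (hypothetical scheme)."""
    digits = re.sub(r"[\s-]", "", value)
    if digits == "":
        return "empty"
    if not digits.isdigit():
        return "non_numeric"
    if len(digits) != 16:
        return "wrong_length"
    if set(digits) == {"0"}:
        return "all_zeros"
    return "well_formed"


def untested_categories(production_inputs, tested_inputs):
    """Categories seen in production traffic that no existing test exercises."""
    seen = {categorize_card_input(v) for v in production_inputs}
    covered = {categorize_card_input(v) for v in tested_inputs}
    return sorted(seen - covered)


# Sanitized, made-up samples standing in for production logs and test data.
production = ["4111-1111-1111-1111", "", "4111-1111", "abcd-1111-1111-1111"]
tested = ["4111-1111-1111-1111", "0000-0000-0000-0000"]
print(untested_categories(production, tested))
# ['empty', 'non_numeric', 'wrong_length']
```

Each category in the output is a candidate for a new generated test.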

Test Prioritization and Risk Prediction

Using machine learning models (typically classification or ranking, sometimes combined with clustering of related tests), AI identifies which tests should run first based on:

- Recent code changes

- Developer activity patterns

- Historical defect density

This ensures critical paths are validated faster, reducing feedback loops.
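
As a rough illustration, the signals above can be combined into a single score per test. This sketch uses a fixed weighted sum rather than a trained model. The `SuiteEntry` fields, the weights, and the sample data are assumptions, and developer activity is left out to keep the example short.

```python
from dataclasses import dataclass


@dataclass
class SuiteEntry:
    name: str
    covered_files: set
    recent_failure_rate: float  # share of recent builds in which the test failed


def prioritize(tests, changed_files, change_weight=0.7, history_weight=0.3):
    """Order tests by a weighted score of change overlap and failure history."""
    def score(test):
        if test.covered_files:
            overlap = len(test.covered_files & changed_files) / len(test.covered_files)
        else:
            overlap = 0.0
        return change_weight * overlap + history_weight * test.recent_failure_rate

    return sorted(tests, key=score, reverse=True)


tests = [
    SuiteEntry("test_cart", {"cart.py", "pricing.py"}, 0.05),
    SuiteEntry("test_payment", {"payment.py"}, 0.20),
    SuiteEntry("test_profile", {"profile.py"}, 0.40),
]
ordered = prioritize(tests, changed_files={"payment.py", "pricing.py"})
print([t.name for t in ordered])
# ['test_payment', 'test_cart', 'test_profile']
```

In a learned system the weights would come from training on historical outcomes instead of being set by hand.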

Self-Healing Test Automation

In CI/CD environments, small UI or API changes often break test scripts.

AI-based frameworks use computer vision and reinforcement learning to automatically update locators or flows, preventing test failures.
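
The core of self-healing can be sketched without computer vision or reinforcement learning. The simplified version below swaps in plain attribute matching: when the recorded locator no longer resolves, it picks the element on the current page that shares the most attributes with the one recorded earlier. The attribute names, the threshold, and the page data are assumptions.

```python
def similarity(expected, candidate):
    """Fraction of the expected attributes that the candidate element matches."""
    if not expected:
        return 0.0
    matches = sum(1 for key, value in expected.items() if candidate.get(key) == value)
    return matches / len(expected)


def heal_locator(expected, page_elements, threshold=0.6):
    """Return the best-matching element, or None if nothing is similar enough."""
    best = max(page_elements, key=lambda el: similarity(expected, el), default=None)
    if best is None or similarity(expected, best) < threshold:
        return None
    return best


# Attributes recorded when the test last passed.
recorded = {"id": "submit-btn", "tag": "button", "text": "Pay now", "class": "primary"}
# After a UI change the id was renamed, so an exact id lookup would fail.
current_page = [
    {"id": "cancel", "tag": "button", "text": "Cancel", "class": "secondary"},
    {"id": "pay-button", "tag": "button", "text": "Pay now", "class": "primary"},
]
healed = heal_locator(recorded, current_page)
print(healed["id"])  # pay-button
```

Returning `None` below the threshold matters: a healed locator that silently points at the wrong element is worse than a failing test.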

Autonomous Root Cause Analysis

AI correlates failure logs, stack traces, and code diffs to pinpoint the root cause of issues — sometimes even suggesting fixes.

For example, a machine learning system can analyze 100+ build logs and detect that 80% of test failures stem from a single configuration error.
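
A scenario like this can be approximated with simple log normalization and counting, before any model is involved. The sketch below strips the variable parts of each error line so identical failures group together, then reports the most common group and its share. The log lines are invented for illustration.

```python
import re
from collections import Counter


def signature(log_line):
    """Normalize an error line so that variable parts do not split the groups."""
    line = re.sub(r"0x[0-9a-fA-F]+", "<hex>", log_line)
    line = re.sub(r"\d+", "<n>", line)
    return line.strip()


def dominant_failure(error_lines):
    """Return (signature, share) for the most common normalized error."""
    if not error_lines:
        return None, 0.0
    counts = Counter(signature(line) for line in error_lines)
    top, count = counts.most_common(1)[0]
    return top, count / len(error_lines)


# Hypothetical first-error lines extracted from failed builds.
errors = [
    "ConfigError: DB_HOST not set (worker 3)",
    "ConfigError: DB_HOST not set (worker 7)",
    "ConfigError: DB_HOST not set (worker 12)",
    "ConfigError: DB_HOST not set (worker 1)",
    "TimeoutError: request exceeded 30s",
]
top, share = dominant_failure(errors)
print(top, share)  # ConfigError: DB_HOST not set (worker <n>) 0.8
```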

Integrating AI into the DevOps Toolchain

Step 1: Data Aggregation

Collect high-quality data from:

- CI/CD logs (Jenkins, GitHub Actions)

- Code repositories (GitLab, Bitbucket)

- Test results and defect databases (Jira, TestRail)

The cleaner the data, the more accurate the AI predictions.
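
As one possible starting point for aggregation, many CI systems can export test results as JUnit-style XML. The sketch below flattens such a report into one row per test case, ready to be joined with commit or defect data. The row fields and the sample report are assumptions, and skipped tests are counted as passed for simplicity.

```python
import xml.etree.ElementTree as ET


def parse_junit(xml_text, build_id):
    """Flatten a JUnit-style XML report into one dict per test case."""
    root = ET.fromstring(xml_text.strip())
    rows = []
    for case in root.iter("testcase"):
        failed = case.find("failure") is not None or case.find("error") is not None
        rows.append({
            "build_id": build_id,
            "test": f"{case.get('classname')}.{case.get('name')}",
            "duration_s": float(case.get("time", "0")),
            "passed": not failed,
        })
    return rows


# Hypothetical report for a single build.
report = """
<testsuite name="payments" tests="2">
  <testcase classname="tests.test_payment" name="test_valid_card" time="0.12"/>
  <testcase classname="tests.test_payment" name="test_invalid_card" time="0.30">
    <failure message="expected 400, got 500"/>
  </testcase>
</testsuite>
"""
rows = parse_junit(report, build_id="build-101")
for row in rows:
    print(row)
```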

Step 2: Model Training

Use historical data to train models for:

- Test selection

- Risk prediction

- Anomaly detection

Teams can use frameworks like TensorFlow, PyTorch, or even AutoML platforms for faster prototyping.
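
To show what training for risk prediction involves, here is a small logistic regression written in plain Python so that it runs without any of the frameworks named above. It is a teaching sketch, not a production setup. The two features, the eight training rows, and the hyperparameters are all made up.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(features, labels, epochs=5000, lr=0.5):
    """Fit weights and a bias with batch gradient descent on log loss."""
    n_features = len(features[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(features, labels):
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for i, xi in enumerate(x):
                grad_w[i] += error * xi
            grad_b += error
        weights = [w - lr * g / len(features) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(features)
    return weights, bias


def predict_risk(weights, bias, x):
    """Estimated probability that a commit with features x breaks the build."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Hypothetical features per commit: [files_changed / 10, touched_hot_module (0 or 1)]
X = [[0.1, 0], [0.2, 0], [0.3, 0], [0.8, 1], [0.9, 1], [1.0, 1], [0.7, 0], [0.2, 1]]
y = [0, 0, 0, 1, 1, 1, 1, 0]  # 1 = the build failed

weights, bias = train_logistic(X, y)
low = predict_risk(weights, bias, [0.1, 0])   # small change, cold module
high = predict_risk(weights, bias, [0.9, 1])  # large change, hot module
print(f"small change: {low:.2f}, large change: {high:.2f}")
```

With real data, a framework would handle feature scaling, regularization, and evaluation on a held-out set, none of which this sketch attempts.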

Step 3: Continuous Learning

AI in DevOps is not a “set and forget” system. Models should be retrained regularly as the codebase evolves; otherwise their predictions drift away from how the system currently behaves.
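
One simple way to decide when to retrain is to track how often recent predictions matched reality and compare that with the accuracy measured at training time. The `DriftMonitor` class below is a hypothetical sketch; the window size and tolerance are arbitrary choices.

```python
from collections import deque


class DriftMonitor:
    """Track recent prediction accuracy and flag when it falls below a baseline."""

    def __init__(self, baseline_accuracy, window=50, tolerance=0.10):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)  # True where the prediction was right

    def record(self, predicted_fail, actually_failed):
        self.outcomes.append(predicted_fail == actually_failed)

    def needs_retraining(self):
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough evidence yet
        accuracy = sum(self.outcomes) / len(self.outcomes)
        return accuracy < self.baseline - self.tolerance


monitor = DriftMonitor(baseline_accuracy=0.90, window=10)
for _ in range(10):
    monitor.record(predicted_fail=True, actually_failed=True)
before = monitor.needs_retraining()  # window accuracy is 1.0

for _ in range(4):
    monitor.record(predicted_fail=True, actually_failed=False)
after = monitor.needs_retraining()   # window accuracy is 0.6, below 0.8

print(before, after)  # False True
```

A pipeline job could call `needs_retraining()` after each build and start a training run when it returns `True`.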

Step 4: Feedback Loop Automation

Integrate AI outputs into CI/CD pipelines using webhooks and APIs.

Example:

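    # GitHub Actions: AI-based test prioritization step (excerpt)
    # Note: --select is not a built-in pytest option; it is assumed to be
    # provided by a plugin or a custom conftest.py hook.
    jobs:
      ai_test_run:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v4
          - name: Fetch risk scores
            run: python ai_risk_model.py
          - name: Run high-priority tests
            run: pytest --select high_risk_tests.json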
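
The `--select` flag in the last step is not part of pytest itself, so something has to define it. One way, sketched here as an assumption rather than the article's actual setup, is a `conftest.py` that registers the option and drops every collected test not listed in the file. It also assumes `high_risk_tests.json` holds a JSON list of pytest node IDs such as `tests/test_payment.py::test_invalid_card`.

```python
# conftest.py
import json


def partition(items, wanted_ids):
    """Split collected items into (kept, dropped) by node ID, preserving order."""
    kept = [item for item in items if item.nodeid in wanted_ids]
    dropped = [item for item in items if item.nodeid not in wanted_ids]
    return kept, dropped


def pytest_addoption(parser):
    # Registers the custom --select flag used by the pipeline step.
    parser.addoption(
        "--select",
        action="store",
        default=None,
        help="JSON file listing the test node IDs to run",
    )


def pytest_collection_modifyitems(config, items):
    path = config.getoption("--select")
    if not path:
        return  # flag not given: run everything as usual
    with open(path) as handle:
        wanted_ids = set(json.load(handle))
    kept, dropped = partition(items, wanted_ids)
    items[:] = kept  # pytest expects the list to be modified in place
    if dropped:
        # Report the skipped tests as deselected in the run summary.
        config.hook.pytest_deselected(items=dropped)
```

Keeping the filtering in a separate `partition` function makes it testable without starting a pytest session.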

Benefits of AI-Driven Testing in DevOps

Speed & Efficiency

AI automates repetitive testing tasks, which can cut test cycle times by as much as 70% in favorable cases.

Smarter Test Coverage

Machine learning uncovers hidden dependencies and increases test depth.

Reduced Costs

Routine checks need fewer human testers, so teams can redirect effort toward exploratory testing.

Predictive Quality

Identify and address issues before they reach production.

Continuous Improvement

AI learns from every build, making each iteration more efficient and accurate.

Real-World Use Cases

Netflix: Predictive Test Selection

Netflix uses AI to select and prioritize tests based on recent code changes, cutting down test times without sacrificing reliability.

Google: Flaky Test Detection

Google’s internal tools use machine learning to identify and quarantine flaky tests automatically.

Microsoft: Code Coverage Optimization

Microsoft applies reinforcement learning to dynamically choose which tests to execute for the most coverage per build minute.
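
The objective of "coverage per build minute" can be illustrated with a much simpler technique than reinforcement learning. The sketch below is a greedy heuristic, not Microsoft's method: it repeatedly picks the affordable test that adds the most new coverage per minute until the time budget runs out. The suite data is invented, and durations are assumed to be positive.

```python
def select_tests(tests, budget_minutes):
    """Greedily pick tests with the best new-coverage-per-minute ratio.

    `tests` maps a test name to (duration_minutes, set_of_covered_lines).
    """
    remaining = dict(tests)
    covered, chosen, used = set(), [], 0.0

    def gain_rate(name):
        duration, lines = remaining[name]
        return len(lines - covered) / duration

    while remaining:
        affordable = [n for n in remaining if used + remaining[n][0] <= budget_minutes]
        if not affordable:
            break
        best = max(affordable, key=gain_rate)
        if gain_rate(best) == 0:
            break  # nothing affordable adds new coverage
        duration, lines = remaining.pop(best)
        covered.update(lines)
        chosen.append(best)
        used += duration
    return chosen, covered


# Hypothetical suite: name -> (minutes, covered line IDs)
suite = {
    "test_api": (2.0, {1, 2, 3, 4, 5, 6}),
    "test_ui": (5.0, {5, 6, 7, 8, 9, 10, 11}),
    "test_smoke": (1.0, {1, 2}),
    "test_db": (3.0, {12, 13, 14}),
}
chosen, covered = select_tests(suite, budget_minutes=6.0)
print(chosen, len(covered))  # ['test_api', 'test_db'] 9
```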

Salesforce: Intelligent Test Maintenance

Salesforce integrated AI agents that automatically repair broken test scripts when UI elements change.

Challenges & Ethical Considerations

AI testing isn’t magic — it faces real-world constraints:

- Data Quality and Bias: Poor or unrepresentative training data leads to unreliable predictions.

- Explainability: AI-generated results can be opaque.

- Over-automation Risk: Too much reliance on AI can reduce human oversight.

- Security & Compliance: Training data must adhere to data privacy and governance standards.

Balancing automation with accountability ensures that DevOps remains responsible and transparent.

Future Trends: Towards Autonomous DevOps

AI testing is the foundation for a larger evolution — Autonomous DevOps, where:

- Pipelines optimize themselves in real time.

- AI agents make deployment and rollback decisions autonomously.

- Testing becomes proactive rather than reactive.
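
A very small version of an automated rollback decision might compare the error rate after a deployment with a recent baseline. The sketch below uses a z-score threshold as a stand-in for a learned anomaly detector. The function name, the threshold, and the sample error rates are assumptions.

```python
import statistics


def should_roll_back(baseline_error_rates, current_error_rate, z_threshold=3.0):
    """Flag a rollback when the current error rate is far above the baseline."""
    mean = statistics.mean(baseline_error_rates)
    stdev = statistics.stdev(baseline_error_rates)
    if stdev == 0:
        return current_error_rate > mean
    z_score = (current_error_rate - mean) / stdev
    return z_score > z_threshold


# Hypothetical error rates from the six most recent healthy deployments.
baseline = [0.010, 0.012, 0.011, 0.009, 0.013, 0.010]
print(should_roll_back(baseline, 0.011))  # False: within normal variation
print(should_roll_back(baseline, 0.050))  # True: far above the baseline
```

In practice a decision like this would usually be paired with a human-visible audit trail, in line with the oversight concerns raised earlier.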

The future DevOps engineer will act more like an AI orchestrator, guiding intelligent systems instead of manually scripting them.

Conclusion

AI-driven testing represents a seismic shift in how DevOps pipelines operate. By transforming reactive testing into predictive quality assurance, it reduces risk, accelerates delivery, and enhances reliability.

As enterprises scale, those who embrace AI testing early will gain not just speed, but strategic resilience — turning their DevOps pipelines into self-learning, adaptive systems.