The landscape of artificial intelligence (AI) is entering a new era where scale matters more than ever. In 2025, tech giants and startups are pushing the boundaries of model size, with some developing trillion-parameter models while others focus on cost-efficient architectures. Among the most notable are Ant Group’s Ling-1T, a trillion-parameter open-source language model, and DeepSeek R1, a more budget-friendly yet powerful large language model. This article explores this escalating competition, the technological innovations behind these models, and their implications for the AI ecosystem.

The Rise of Massive Language Models

Over the past few years, AI research has turned into an arms race over model size. Larger models generally become more capable at understanding complex language, reasoning, and handling multimodal tasks. In 2025, models with over a trillion parameters are no longer just prototypes; they are being deployed for real-world applications ranging from natural language understanding to multimodal reasoning.

Why Model Scale Matters

Increasing a model’s parameter count generally improves its ability to capture intricate patterns in data, deliver nuanced responses, and perform complex reasoning, provided that training data and compute grow along with it. However, scaling also introduces significant challenges, including very high training costs, energy consumption, and infrastructure requirements.

The competition is now centered on balancing these factors — maximizing capacity while minimizing costs — which has led to breakthroughs in model architecture, training efficiency, and data utilization.
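To make the cost side of this trade-off concrete, a widely used rule of thumb from the scaling-law literature puts training compute at roughly 6 floating-point operations per parameter per training token. The sketch below applies that approximation to a few hypothetical model sizes. The token count, accelerator throughput, and utilization are illustrative assumptions, not figures reported for Ling-1T or DeepSeek R1.

```python
def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute using the ~6 * N * D rule of thumb.

    For sparse (Mixture-of-Experts) models, `params` should be the number of
    parameters active per token rather than the total parameter count.
    """
    return 6.0 * params * tokens


def gpu_days(flops: float, peak_flops_per_gpu: float, utilization: float) -> float:
    """Convert a FLOP budget into accelerator-days at a sustained utilization."""
    seconds = flops / (peak_flops_per_gpu * utilization)
    return seconds / 86_400


if __name__ == "__main__":
    TOKENS = 10e12  # assumed: 10 trillion training tokens
    PEAK = 1e15     # assumed: 1 PFLOP/s peak per accelerator
    UTIL = 0.4      # assumed: 40% sustained utilization

    # Hypothetical dense model sizes, chosen only for comparison.
    for name, n in [("3B", 3e9), ("70B", 70e9), ("1T", 1e12)]:
        f = training_flops(n, TOKENS)
        days = gpu_days(f, PEAK, UTIL)
        print(f"{name:>4} params: {f:.2e} FLOPs, ~{days:,.0f} accelerator-days")
```

Because the estimate is linear in both parameters and tokens, a model with ten times the active parameters costs roughly ten times as much to train on the same data, which is why architectural efficiency matters as much as raw size.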

Ant Group’s Ling-1T: The New Paradigm in AI

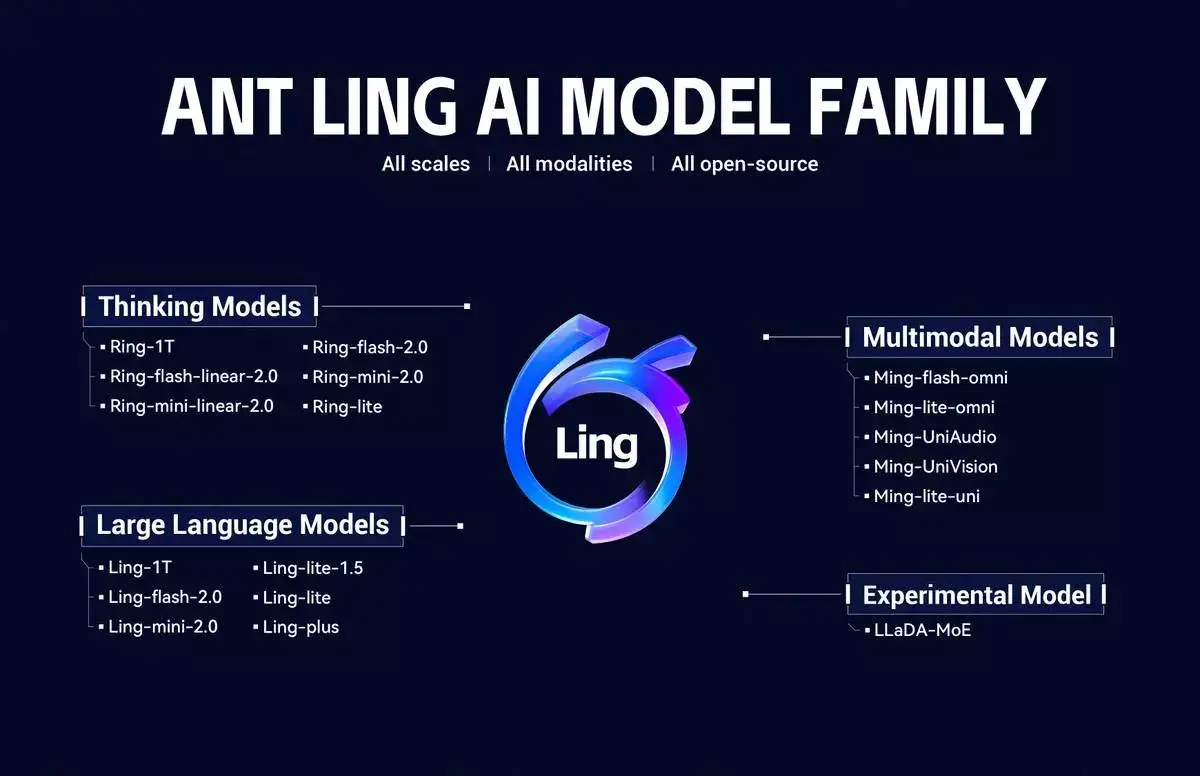

Ant Group, a fintech giant known for its innovations in digital finance, announced Ling-1T, a trillion-parameter language model designed to set new standards in AI performance. Built on open-source principles, Ling-1T uses an advanced architecture aimed at code generation, logical reasoning, and mathematical problem-solving.

Key Features of Ling-1T

- Trillion Parameters: One of the largest open-source language models released to date, rivaling the models of industry giants.

- Open-Source License: Licensed under MIT, making it accessible for researchers, developers, and enterprises worldwide.

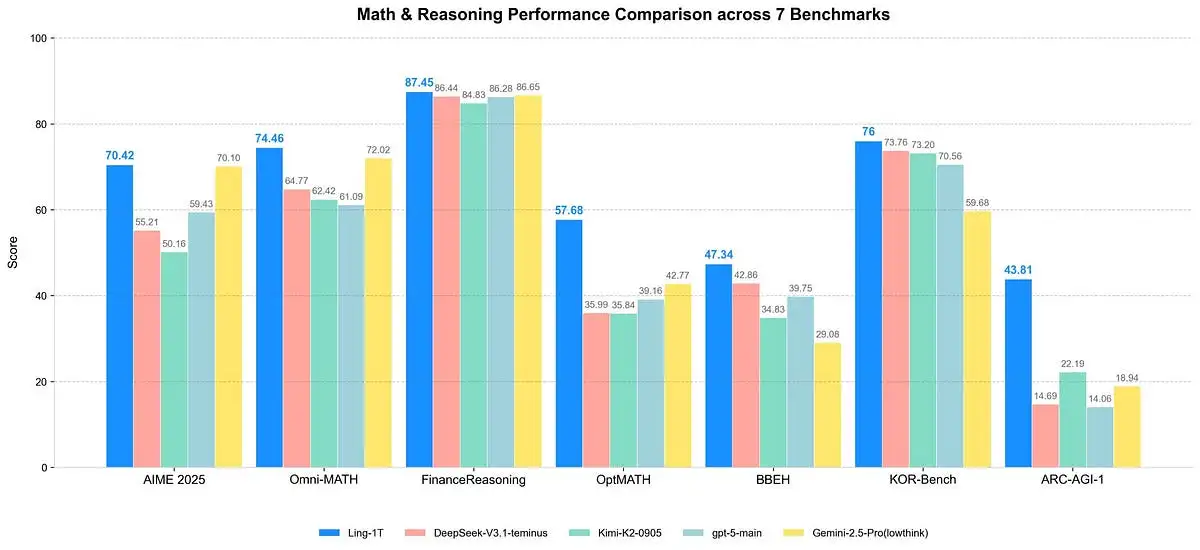

- High Performance: Achieved 70.42% accuracy on the AIME 2025 benchmark, currently leading in open-source model rankings.

- Multilingual and Multimodal Capabilities: Designed to handle language, images, and other data types, enabling cross-modal reasoning.

- Specialized Series: Includes non-thinking models for language processing, reasoning models, and multimodal models.

Ant Group’s Ling-1T signals China’s rapid advances in AI and challenges the dominance of Western technology giants.

The Significance in the Industry

The development of Ling-1T shows how scale and open access can converge to democratize AI research and deployment. It signals a shift in which models that cost millions or even billions of dollars to develop become available across sectors such as finance, healthcare, and manufacturing.

DeepSeek R1: A Cost-Effective Giant

As model size continues to grow, the cost of training such models becomes prohibitive. Addressing this challenge, DeepSeek R1 has emerged as a cost-effective alternative capable of delivering high performance without the massive infrastructure overhead typical of trillion-parameter models.

Features of DeepSeek R1

- Optimized Architecture: Uses sparse computation (a Mixture-of-Experts design that activates only part of the network for each token) and mixed-precision training to reduce hardware needs.

- Cost-Efficiency: Achieves competitive results with fewer GPUs, reducing operational costs significantly.

- Flexible Deployment: Designed to run on smaller clusters, making it accessible to startups and research institutions.

- Powerful Reasoning: Capable of complex problem-solving and multi-step reasoning, comparable to larger models.

The R1 model exemplifies a growing trend: scaling down costs while maintaining an impressive degree of AI capability — an essential factor for commercial and academic adoption.
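One way to see why numeric precision affects hardware needs is to estimate how much memory the weights alone occupy at different precisions. The sketch below is a back-of-the-envelope calculation: the parameter count and the 80 GB device size are assumptions for illustration, and it ignores activations, optimizer state, and inference caches, all of which add to the real footprint.

```python
import math

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp8": 1}


def weight_memory_gb(params: float, dtype: str) -> float:
    """Memory for the weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return params * BYTES_PER_PARAM[dtype] / 1e9


def min_devices(params: float, dtype: str, device_memory_gb: float) -> int:
    """Lower bound on devices needed just to hold the weights."""
    return math.ceil(weight_memory_gb(params, dtype) / device_memory_gb)


if __name__ == "__main__":
    PARAMS = 1e12       # assumed: a hypothetical trillion-parameter model
    DEVICE_GB = 80.0    # assumed: 80 GB of memory per accelerator

    for dtype in BYTES_PER_PARAM:
        gb = weight_memory_gb(PARAMS, dtype)
        n = min_devices(PARAMS, dtype, DEVICE_GB)
        print(f"{dtype}: {gb:,.0f} GB of weights, at least {n} devices")
```

Halving the bytes per parameter halves the number of devices needed to hold the model, which is one reason lower-precision formats make smaller clusters viable.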

How DeepSeek R1 Is Disrupting the Market

The model’s ability to deliver large-scale reasoning and language capabilities at a fraction of the cost is disruptive. As AI architectures improve, smaller organizations now have a realistic pathway to deploy models that once only large corporations could afford. Cost-effective models like DeepSeek R1 open new horizons in AI democratization, innovation, and competition.

The Escalation of Model Scale: Why Now?

The driving force behind this escalating competition is the pursuit of better AI performance. Larger models tend to score higher on benchmarks, but governments, industries, and startups are also pushing for affordability and energy efficiency, which drives innovation in training algorithms, hardware, and architecture.

Why Are Model Scale and Cost-Effectiveness Colliding?

- Hardware Breakthroughs: Advancements like Intel’s 18A process and Apple’s 3nm chips enable the construction of more powerful AI processors at lower costs.

- Software Innovations: Sparse attention and mixed-precision training substantially reduce the computation needed to train massive models.

- Open-Source Initiatives: Companies like Ant Group maintain open development to foster community-driven innovation.

- Market Pressure: Enterprises demand scalable AI capable of deployment on edge devices and in resource-limited environments, making cost-efficient models more attractive.
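The saving from sparse attention mentioned above can be illustrated by counting how many query-key pairs are scored. The sketch below compares causal dense attention with a causal sliding-window pattern, which is one common form of sparse attention. It is used here purely as an example and is not claimed to be the pattern used by any model named in this article; the sequence length and window size are assumptions.

```python
def dense_pairs(seq_len: int) -> int:
    """Causal dense attention: token i attends to tokens 0..i."""
    return seq_len * (seq_len + 1) // 2


def sliding_window_pairs(seq_len: int, window: int) -> int:
    """Causal sliding-window attention.

    Token i attends to at most `window` most recent tokens, itself included.
    """
    return sum(min(i + 1, window) for i in range(seq_len))


if __name__ == "__main__":
    SEQ_LEN = 32_768  # assumed context length
    WINDOW = 1_024    # assumed local window size

    dense = dense_pairs(SEQ_LEN)
    sparse = sliding_window_pairs(SEQ_LEN, WINDOW)
    print(f"dense pairs:  {dense:,}")
    print(f"window pairs: {sparse:,}")
    print(f"fraction of dense work: {sparse / dense:.3f}")
```

Dense attention grows quadratically with sequence length, while the windowed variant grows roughly linearly once the sequence is longer than the window, so the gap widens as contexts get longer.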

Future of Large Scale AI: Trends and Implications

The Next-Generation AI Models

The next wave of large models will go beyond just size, focusing on utility, efficiency, transparency, and expertise. In particular:

- Smaller, Smarter Models: Small language models (SLMs) with as few as 3 billion parameters that deliver performance comparable to much larger models on targeted tasks.

- Multimodal Models: Capable of understanding text, images, video, and speech within a single framework.

- Open and Collaborative AI: The open-source movement accelerates innovation and reduces barriers to entry.

Industry Impact

- AI Democratization: Accessible, cost-effective models enable startups and researchers globally to innovate.

- Enterprise Innovation: Industry giants leverage scale-out architectures to deliver smarter products.

- Sustainability: Efficient models reduce energy consumption, aligning AI development with sustainability goals.

- Market Competition: Companies race to build the most powerful and affordable models, fueling rapid innovation cycles.

Challenges and Ethical Considerations

Despite rapid advancements, scale is not the only metric for success. Ethical concerns surrounding AI transparency, potential biases, and responsible deployment become more pressing with increasing model capabilities.

- Bias and Fairness: Larger models trained on broad data sets risk amplifying biases unless carefully monitored.

- Energy Consumption: Massive models consume significant energy, raising sustainability issues.

- Intellectual Property: Open-source models like Ling-1T challenge existing IP frameworks, prompting regulatory debates.

- Security Risks: Larger models may be more vulnerable to adversarial attacks or misuse.

Final Thoughts

The competition between trillion-parameter models like Ant Group’s Ling-1T and cost-efficient giants like DeepSeek R1 signals a transformative phase in AI. Scale alone no longer determines capability; efficient, accessible, and ethical AI models are becoming equally crucial.

As AI continues to evolve, these technological advances will accelerate innovation, democratize access, and challenge both industry standards and societal norms. For developers, researchers, and enterprises, understanding these trends is essential to navigating the future of intelligent systems.